The world of technology is filled with myths, misconceptions, and urban legends that have become an integral part of its folklore. These stories have been passed down through the years, often blurring the lines between fact and fiction. In this article, we'll delve into the 5 pillars of definitive tech mythos, exploring the origins, evolution, and impact of these enduring tales.

The Myth of the "Lone Genius"

One of the most pervasive myths in the tech world is that of the "lone genius": the idea that a single individual, often working in isolation, can create revolutionary technology that changes the world. Popular accounts of figures such as Thomas Edison, Alexander Graham Bell, and Steve Jobs keep this myth alive by crediting each of them with single-handedly creating groundbreaking innovations.

However, the reality is usually more complex. Many of these "lone geniuses" relied on teams of researchers, engineers, and collaborators. Edison ran his Menlo Park laboratory with a staff of machinists and experimenters, and Apple's first computers were designed largely by Steve Wozniak. More broadly, technology develops cumulatively, with each advance building on the discoveries and innovations of others.

The Myth of "Moore's Law"

In 1965, Gordon Moore, who would later co-found Intel, observed that the number of components on an integrated circuit was doubling roughly every year. In 1975 he revised the forecast to a doubling approximately every two years. The prediction implies exponential gains in computing power and a steadily falling cost per transistor. Known as Moore's Law, it became a cornerstone of the tech industry, driving innovation and investment in semiconductor technology.

However, as transistor miniaturization approaches its physical limits, it has become increasingly clear that Moore's Law was never a fundamental law of physics. It is an empirical observation that the industry adopted as a roadmap, which made it partly a self-fulfilling prophecy. Sustaining that pace now faces significant challenges, and the future of computing may require new approaches and technologies.
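To make the "exponential" part concrete, here is a minimal Python sketch of the two-year doubling rule. The function name `projected_transistors` is hypothetical, and the starting figure of roughly 2,300 transistors (the Intel 4004 of 1971) is used only as an illustrative baseline. The output is a mechanical projection of the rule, not a record of real chips.

```python
def projected_transistors(initial_count: float, years: float, doubling_period: float = 2.0) -> float:
    """Project a transistor count forward, assuming a fixed doubling period in years.

    Hypothetical helper for illustration; it applies the idealized rule only.
    """
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    # Exponential growth: the count doubles once per doubling_period.
    return initial_count * 2 ** (years / doubling_period)


if __name__ == "__main__":
    # Illustrative baseline: roughly 2,300 transistors (Intel 4004, 1971).
    start = 2_300
    for years in (10, 20, 30, 40, 50):
        print(f"{1971 + years}: ~{projected_transistors(start, years):,.0f} transistors")
```

Under a two-year doubling period, every decade multiplies the count by 2^5 = 32, so five decades turn about 2,300 transistors into roughly 77 billion. The same arithmetic hints at why the pace is hard to sustain: each new step demands yet another doubling on top of an already enormous count.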

The Myth of "Artificial Intelligence"

Artificial intelligence (AI) has become a buzzword in the tech industry, with many companies claiming to offer AI-powered solutions. However, true AI in the popular sense, meaning machines that can think and learn as flexibly as humans (often called artificial general intelligence), remains a distant goal.

Most of what is currently referred to as AI is actually machine learning, a subset of AI that involves training algorithms on large datasets to perform specific tasks. While machine learning has led to significant advances in areas like image recognition and natural language processing, it is still a far cry from true intelligence.
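As a minimal illustration of "training an algorithm on data to perform a specific task", the sketch below trains a perceptron, one of the simplest learning algorithms, on a four-example dataset for the logical AND function. The dataset and function names are hypothetical choices for this example. Real systems use vastly larger datasets and models, but the principle of fitting parameters to examples of a single task is the same.

```python
# Hypothetical toy dataset: ((x1, x2), label) pairs for logical AND.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, x):
    """Return 1 if the weighted sum of the inputs plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(data, epochs=20, learning_rate=1.0):
    """Classic perceptron rule: adjust weights only when a prediction is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, label in data:
            error = label - predict(weights, bias, x)  # -1, 0, or +1
            for i, xi in enumerate(x):
                weights[i] += learning_rate * error * xi
            bias += learning_rate * error
    return weights, bias


if __name__ == "__main__":
    weights, bias = train_perceptron(AND_DATA)
    for x, label in AND_DATA:
        print(x, "->", predict(weights, bias, x), "(expected", label, ")")
```

The learned weights and bias encode this one mapping and nothing else; there is no general understanding anywhere in them, which is the gap between narrow machine learning and "true" intelligence.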

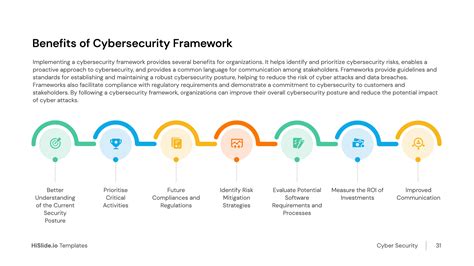

The Myth of "Cybersecurity"

The rise of cyber threats has led to growing concern about cybersecurity, with many companies investing heavily in measures to protect their networks and data. However, cybersecurity is something of a myth, at least in the sense that the word implies a guarantee of safety that no current system can provide.

The truth is that all systems are vulnerable to some extent, and the best that can be hoped for is to reduce the risk of a breach or attack. Moreover, the growing complexity of modern systems, combined with increasingly sophisticated threats, means that cybersecurity is a never-ending battle.
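A back-of-the-envelope model shows why adding defenses reduces risk without eliminating it. This sketch assumes, for simplicity, that each security layer is bypassed independently with some probability; the function name and the layer probabilities below are invented for illustration and do not describe any real product. Under that assumption, the chance that an attack gets past every layer is the product of the per-layer bypass probabilities, which shrinks with each added layer but stays above zero as long as every layer can fail.

```python
from math import prod


def residual_breach_probability(bypass_probabilities):
    """Probability that an attack gets past every layer.

    Hypothetical model: layers are assumed to fail independently, so the
    overall bypass probability is the product of the per-layer values.
    """
    for p in bypass_probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must be between 0 and 1")
    # With no layers at all, prod([]) == 1: every attack succeeds.
    return prod(bypass_probabilities)


if __name__ == "__main__":
    # Hypothetical per-layer bypass probabilities (illustrative only).
    layers = [0.1, 0.2, 0.5]
    for n in range(len(layers) + 1):
        risk = residual_breach_probability(layers[:n])
        print(f"{n} layer(s): breach probability {risk:.3f}")
```

Real-world failures are often correlated (a single stolen credential may defeat several layers at once), so the independence assumption makes this estimate optimistic rather than conservative.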

The Myth of "The Digital Divide"

The digital divide – the idea that there is a significant gap between those who have access to technology and those who do not – has been a topic of concern for many years. However, the reality is that the digital divide is not just a matter of access, but also of skills, literacy, and socioeconomic factors.

At the same time, the spread of mobile devices and social media has brought many previously excluded people online. That access, however, is often limited, and many people still lack the skills and knowledge to use technology effectively.


FAQ

What is the significance of the "lone genius" myth in tech?

The "lone genius" myth has contributed to the notion that individual creativity and innovation are the primary drivers of technological progress. However, this myth overlooks the important role of collaboration, teamwork, and cumulative innovation in shaping the tech industry.

Is Moore's Law still relevant in today's tech industry?

While Moore's Law drove decades of innovation and investment in semiconductor technology, its relevance is waning as the industry approaches the physical limits of transistor miniaturization. New approaches and technologies will be needed to keep increasing computing power and reducing cost.

What are the limitations of artificial intelligence in its current state?

Current AI systems are primarily based on machine learning, which involves training algorithms on large datasets to perform specific tasks. However, these systems lack true intelligence, creativity, and common sense, and are limited in their ability to generalize and adapt to new situations.

In conclusion, the 5 pillars of definitive tech mythos – the "lone genius," Moore's Law, artificial intelligence, cybersecurity, and the digital divide – have contributed to the complexity and mystique of the tech industry. By understanding the origins, evolution, and limitations of these myths, we can better appreciate the true nature of technological innovation and progress.